Unraveling the Enigma: Exploring the Hidden Markov Model

Introduction

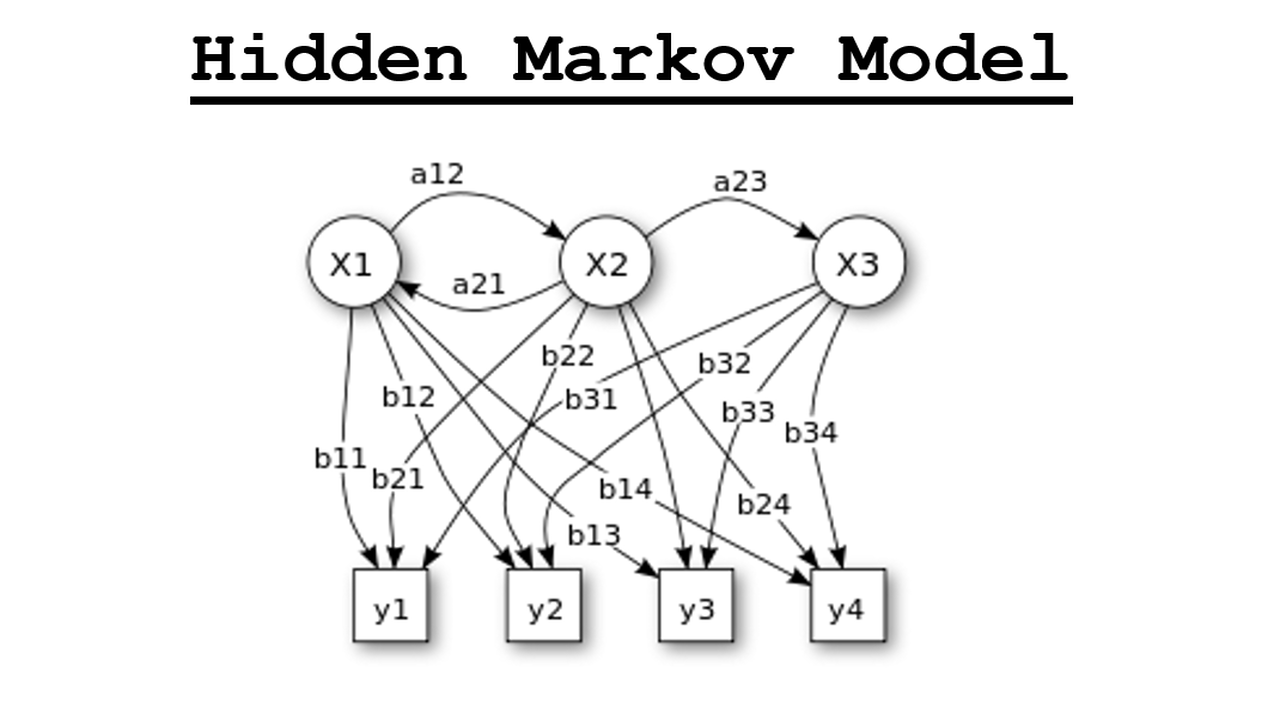

A Hidden Markov Model (HMM) is a probabilistic model used in machine learning to describe a system that moves through a sequence of hidden states, each of which produces an observed state. It is mainly used for prediction and classification on sequential data.

Fig 1: Hidden Markov Model

Terminology Decoded

Model:

In machine learning, a model is what a learning algorithm produces when it is fitted to a training dataset. It captures the patterns found in that data so that, when it is later given new data, it can produce the output it learned to associate with similar inputs. A model may combine several algorithms.

Hidden States:

Hidden states are the underlying variables or phenomena that generate the observed data. They cannot be measured directly.

Observed States:

Observed states are variables that store the characteristics present in the dataset and that can be measured directly. Each observed state depends on the hidden state that produced it.

Fig 2: Hidden States and Observation States

Transition Probability:

Transition probability is the probability of moving from one hidden state to another between consecutive steps of a sequence.

Emission Probability:

Emission probability is the probability that a given hidden state produces a particular observed state. It quantifies the link between the hidden and observed states.

Fig 3: Transition and Emission Probabilities
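
Both quantities can be written compactly. The symbols below are an assumed, commonly used convention rather than notation taken from the figures: $q_t$ is the hidden state at step $t$, $o_t$ is the observation at step $t$, and $i$, $j$, $k$ index states and observation symbols.

$$
a_{ij} = P(q_{t+1} = j \mid q_t = i), \qquad \sum_{j} a_{ij} = 1
$$

$$
b_{j}(k) = P(o_t = k \mid q_t = j), \qquad \sum_{k} b_{j}(k) = 1
$$

In words, every row of the transition matrix and every row of the emission matrix is a probability distribution, so each row sums to one.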

Explanation

- Labelled Dataset: The process begins with a labelled dataset, in which each data point has an associated label. This dataset, also called the training data, is used to train the HMM. The HMM examines it to find the factors that influence or distinguish the labels, looking for patterns and connections between those factors and the labels.

- Observed States: Factors that can be measured or observed directly are saved as observed states. Variables that capture the important measurements or attributes of the data points are often used to represent these observed states.

- Hidden States: The observed states are used to infer the hidden states. The hidden states represent the underlying variables or phenomena that generate the observed data, and they account for the labels, and the differences between labels, in the dataset. They cannot be measured directly and are stored using the label name or another representation.

- Transition Probability: The transition probabilities give the probability of moving from one hidden state to another. They capture how the hidden states evolve over the course of a sequence and are estimated from the labelled dataset.

- Emission Probability: Given a hidden state, the emission probabilities give the likelihood of each possible output, where an output is a measurement or observed value. They represent the link between the hidden and observed states and are also estimated from the labelled dataset.

- Model Set-up: Once the transition and emission probabilities are calculated, a model is built around them. The HMM uses the training data to learn the patterns and correlations between the hidden and observed states. The trained model can then be used to predict and classify new, previously unseen data.
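
One simple way to estimate both sets of probabilities from a labelled dataset is to count and normalise. The sketch below assumes each training sequence is a list of (hidden state, observation) pairs. The function name `estimate_hmm` and the toy data are illustrative only, and no smoothing is applied, so unseen transitions or emissions simply do not appear in the result.

```python
from collections import Counter, defaultdict


def estimate_hmm(sequences):
    """Estimate transition and emission probabilities by counting.

    `sequences` is a list of sequences; each sequence is a list of
    (hidden_state, observation) pairs taken from a labelled dataset.
    """
    trans_counts = defaultdict(Counter)
    emit_counts = defaultdict(Counter)
    for seq in sequences:
        for i, (state, obs) in enumerate(seq):
            emit_counts[state][obs] += 1
            if i + 1 < len(seq):
                next_state = seq[i + 1][0]
                trans_counts[state][next_state] += 1

    def normalise(counts):
        # Turn each row of raw counts into relative frequencies.
        return {
            s: {k: c / sum(row.values()) for k, c in row.items()}
            for s, row in counts.items()
        }

    return normalise(trans_counts), normalise(emit_counts)


# Hypothetical labelled data, made up for illustration.
data = [
    [("sunny", "sunglasses"), ("sunny", "sunglasses"), ("rainy", "umbrella")],
    [("rainy", "umbrella"), ("rainy", "umbrella"), ("sunny", "umbrella")],
]
transition, emission = estimate_hmm(data)
print(transition)
print(emission)
```

With real data, adding a small constant to every count before normalising is a common way to avoid zero probabilities.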

Algorithm for Hidden Markov Model

1) Define the observation space and the state space

- State Space: This is the set of all potential hidden states, which represent the system's underlying components or phenomena.

- Observation Space: This is the set of all conceivable observations that can be measured or witnessed directly.

2) Define the Initial State Distribution

3) Define the State Transition Probabilities

These probabilities describe the chance of transitioning from one hidden state to another. Together they form the transition matrix, in which each entry is the probability of moving from one state to another.

4) Define the Observation Probabilities

These probabilities describe how likely each observation is to be generated by each hidden state. Together they form the emission matrix, with one row per hidden state and one column per observation.
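
Steps 2 to 4 amount to writing down one vector and two matrices. As a minimal sketch, assuming NumPy and using the hypothetical names `pi` (initial distribution), `A` (transition matrix) and `B` (emission matrix), the helper below checks that a parameter set is well formed before it is used. The numbers are placeholders.

```python
import numpy as np


def validate_hmm(pi, A, B):
    """Check shapes and that every probability row sums to 1."""
    pi = np.asarray(pi, dtype=float)
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    n_states = pi.shape[0]
    if A.shape != (n_states, n_states):
        raise ValueError("A must be n_states x n_states")
    if B.ndim != 2 or B.shape[0] != n_states:
        raise ValueError("B must have one row per hidden state")
    for name, m in (("pi", pi[None, :]), ("A", A), ("B", B)):
        if (m < 0).any() or not np.allclose(m.sum(axis=1), 1.0):
            raise ValueError(f"{name} is not a valid probability distribution")
    return pi, A, B


# Hypothetical model: two hidden states, three observation symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3],
     [0.4, 0.6]]
B = [[0.5, 0.4, 0.1],
     [0.1, 0.3, 0.6]]
pi, A, B = validate_hmm(pi, A, B)
```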

5) Train the Model

6) Decode the Sequence of Hidden States
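
Decoding is commonly done with the Viterbi algorithm, which finds the single most probable hidden-state path for a given observation sequence. The post does not say which decoder it has in mind, so treat the following as one reasonable sketch. It assumes NumPy, integer-coded observations, and the hypothetical parameter names `pi`, `A` and `B`, and it works in log space to avoid numerical underflow on long sequences.

```python
import numpy as np


def viterbi(obs, pi, A, B):
    """Return the most probable hidden-state path (as state indices)."""
    pi, A, B = map(np.asarray, (pi, A, B))
    T, N = len(obs), len(pi)
    with np.errstate(divide="ignore"):  # log(0) = -inf is acceptable here
        log_pi, log_A, log_B = np.log(pi), np.log(A), np.log(B)

    delta = np.empty((T, N))            # best log-probability ending in each state
    back = np.zeros((T, N), dtype=int)  # best predecessor of each state
    delta[0] = log_pi + log_B[:, obs[0]]
    for t in range(1, T):
        # scores[i, j]: best path ending in state i at t-1, then moving to j
        scores = delta[t - 1][:, None] + log_A
        back[t] = scores.argmax(axis=0)
        delta[t] = scores.max(axis=0) + log_B[:, obs[t]]

    # Follow the back-pointers from the best final state.
    path = [int(delta[-1].argmax())]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return path[::-1]


# Hypothetical two-state model with three observation symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
path = viterbi([0, 1, 2], pi, A, B)
print(path)
```

The returned list contains state indices; map them back to state names with whatever ordering was used to build the matrices.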

7) Evaluate the Model
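
One common evaluation measure is how much probability the model assigns to held-out observation sequences, which the forward algorithm computes. The sketch below is an assumption about what "evaluate" could mean here, not the post's stated method. It uses NumPy, integer-coded observations, and the hypothetical names `pi`, `A` and `B`, and it rescales at each step so long sequences do not underflow.

```python
import numpy as np


def forward_log_likelihood(obs, pi, A, B):
    """Log-probability of an observation sequence under the model."""
    pi, A, B = map(np.asarray, (pi, A, B))
    alpha = pi * B[:, obs[0]]      # joint probability of first observation and state
    log_lik = 0.0
    for o in obs[1:]:
        scale = alpha.sum()
        log_lik += np.log(scale)   # accumulate the factor removed by rescaling
        alpha = (alpha / scale) @ A * B[:, o]
    return log_lik + np.log(alpha.sum())


# Hypothetical two-state model with three observation symbols.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
log_lik = forward_log_likelihood([0, 1, 2], pi, A, B)
print(log_lik)
```

A higher log-likelihood on held-out sequences suggests the model explains the data better, which makes this useful for comparing alternative parameter sets.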

Example of Hidden Markov Model

1) Establish the observation and state spaces

- State Space: Let's pretend we have three hidden states: "sunny," "cloudy," and "rainy." These are the possible weather conditions.

- Observation Space: In our case, the observations are "umbrella" and "sunglasses." These are the observable signs that can be used to forecast the weather.

2) Define the Initial State Distribution

3) Determine the Probabilities of State Transition

4) Define the Probabilities of Observation
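
To make steps 1 to 4 concrete, here is a sketch of the weather model as NumPy arrays. Every number is a hypothetical placeholder chosen for illustration, not a value from this post. The `sample` helper is likewise an illustrative addition: it draws a sequence from the model to show how hidden states generate observations.

```python
import numpy as np

states = ["sunny", "cloudy", "rainy"]
observations = ["umbrella", "sunglasses"]

# All probabilities below are hypothetical placeholders.
pi = np.array([0.5, 0.3, 0.2])     # initial state distribution
A = np.array([[0.6, 0.3, 0.1],     # transitions from sunny
              [0.3, 0.4, 0.3],     # transitions from cloudy
              [0.2, 0.3, 0.5]])    # transitions from rainy
B = np.array([[0.1, 0.9],          # sunny  -> umbrella, sunglasses
              [0.4, 0.6],          # cloudy -> umbrella, sunglasses
              [0.8, 0.2]])         # rainy  -> umbrella, sunglasses


def sample(n_steps, seed=0):
    """Draw a hidden weather sequence and the observations it emits."""
    rng = np.random.default_rng(seed)
    state = rng.choice(len(states), p=pi)
    hidden, seen = [], []
    for _ in range(n_steps):
        hidden.append(states[state])
        seen.append(observations[rng.choice(len(observations), p=B[state])])
        state = rng.choice(len(states), p=A[state])
    return hidden, seen


hidden, seen = sample(5)
print(hidden)
print(seen)
```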

5) Develop the Model

6) Decode the Hidden State Sequence
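
For a sequence this short, decoding can be shown by brute force: score every possible weather sequence and keep the most probable one. This is only a sketch of what decoding computes. The probabilities are hypothetical placeholders, and exhaustive search grows exponentially with sequence length, so practical decoders use the Viterbi algorithm instead.

```python
from itertools import product

states = ["sunny", "cloudy", "rainy"]

# All probabilities below are hypothetical placeholders.
start = {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.2}
trans = {
    "sunny":  {"sunny": 0.6, "cloudy": 0.3, "rainy": 0.1},
    "cloudy": {"sunny": 0.3, "cloudy": 0.4, "rainy": 0.3},
    "rainy":  {"sunny": 0.2, "cloudy": 0.3, "rainy": 0.5},
}
emit = {
    "sunny":  {"umbrella": 0.1, "sunglasses": 0.9},
    "cloudy": {"umbrella": 0.4, "sunglasses": 0.6},
    "rainy":  {"umbrella": 0.8, "sunglasses": 0.2},
}


def joint_probability(path, obs):
    """Probability of a hidden path together with the observations."""
    p = start[path[0]] * emit[path[0]][obs[0]]
    for prev, cur, o in zip(path, path[1:], obs[1:]):
        p *= trans[prev][cur] * emit[cur][o]
    return p


def decode_brute_force(obs):
    """Try every hidden path and return the most probable one."""
    candidates = product(states, repeat=len(obs))
    return max(candidates, key=lambda path: joint_probability(path, obs))


seen = ["sunglasses", "umbrella", "umbrella"]
best = decode_brute_force(seen)
print(best, joint_probability(best, seen))
```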

7) Assess the Model
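
When the true weather is known for a test sequence, one simple assessment is the fraction of days on which the decoded state matches it. The sketch below assumes that setup. The helper name `state_accuracy` and both sequences are made up for illustration.

```python
def state_accuracy(true_states, decoded_states):
    """Fraction of positions where the decoded state equals the true state."""
    if len(true_states) != len(decoded_states) or not true_states:
        raise ValueError("sequences must be non-empty and of equal length")
    matches = sum(t == d for t, d in zip(true_states, decoded_states))
    return matches / len(true_states)


# Hypothetical ground truth and decoder output.
true_states = ["sunny", "sunny", "rainy", "rainy", "cloudy"]
decoded_states = ["sunny", "cloudy", "rainy", "rainy", "cloudy"]
accuracy = state_accuracy(true_states, decoded_states)
print(accuracy)
```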

Investigating the Person Behind the Blog

If you want to discover more about me and my experience, please click on the link below to go to the About Me section. More information about my history, interests, and the aim of my site may be found there.

I'm happy to share more useful stuff with you in the future, so stay tuned! Please do not hesitate to contact me if you have any queries or would like to connect.

Thank you for your continued support and for being a part of this incredible blogging community!
